Difference between revisions of "Jouska"

| Line 109: | Line 109: | ||

'''Live Processing Effects''' | '''Live Processing Effects''' | ||

| − | There are | + | There are six different effects used in the piece to process the violin: |

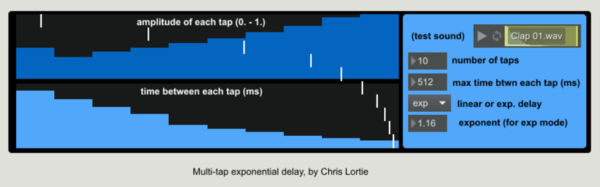

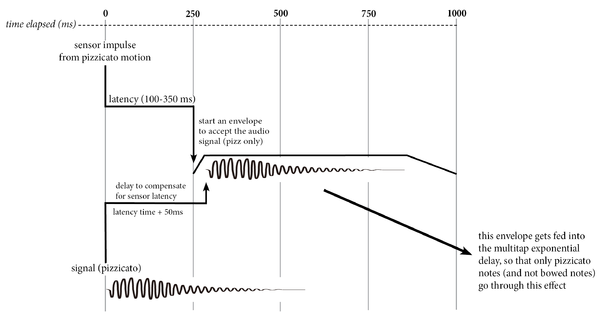

| − | ''Multi-tap Exponential Delay'' -- This is an effect I created | + | ''Multi-tap Exponential Delay'' -- This is an effect I created initially for use in my acousmatic compositions. The effect is essentially a multi-tap delay with the option to provide an exponential curve to the delay times. The delay time and amplitude of each tap can be configured by the user on the main GUI. In the piece, I used this effect exclusively to process pizzicato notes (and ONLY pizzicato notes, not what surrounds them). I built an algorithm that allowed the effect to compensate for the sensor's latency, so that I could capture the pizzicato notes by themselves and send them through the delay lines. |

[[File:Jouska-multitap-gui.PNG|frameless|upright=2.0|alt=Multitap Delay Main GUI]] | [[File:Jouska-multitap-gui.PNG|frameless|upright=2.0|alt=Multitap Delay Main GUI]] | ||

| Line 122: | Line 122: | ||

''Convolution'' -- I used live convolution with a sound file of jingle bells (the instrument, not the Christmas song) to create a shimmering effect. For this, I used the external [multiconvolve~], developed by [http://www.thehiss.org/ HISStools] as part of their Impulse Response Toolbox. | ''Convolution'' -- I used live convolution with a sound file of jingle bells (the instrument, not the Christmas song) to create a shimmering effect. For this, I used the external [multiconvolve~], developed by [http://www.thehiss.org/ HISStools] as part of their Impulse Response Toolbox. | ||

| − | ''Harmonizer'' -- I used a publicly-available Max for Live patch by jonasobermueller called jo.FFTHarmonizer 1.0. | + | ''Harmonizer'' -- I used a publicly-available Max for Live patch by jonasobermueller called jo.FFTHarmonizer 1.0. This transposes the pitch of the incoming signal in the frequency domain through up to four voices. I also have control over the panning and amplitude of each of these voices. |

''Crossfader'' -- I created as a crossfader subpatcher as a means to crossfade between two effects -- this proved as a useful means of utilizing the sensor data, and for creating a smooth fade from one cue's effects to the next. | ''Crossfader'' -- I created as a crossfader subpatcher as a means to crossfade between two effects -- this proved as a useful means of utilizing the sensor data, and for creating a smooth fade from one cue's effects to the next. | ||

| Line 128: | Line 128: | ||

''Sidechain Compressor'' -- I used a simple envelope follower created in [gen~] to create a sidechain compressor. Because it was coded in [gen~], the compressor was more efficient on the CPU, and was evaluated once every sample (48000 times per second), allowing for minimal latency. The compressor allowed me to fix balance issues between my violin processing and the fixed media files (which was especially problematic when dealing with live distortion). This way, the impacts of the fixed media were able to come through the texture. | ''Sidechain Compressor'' -- I used a simple envelope follower created in [gen~] to create a sidechain compressor. Because it was coded in [gen~], the compressor was more efficient on the CPU, and was evaluated once every sample (48000 times per second), allowing for minimal latency. The compressor allowed me to fix balance issues between my violin processing and the fixed media files (which was especially problematic when dealing with live distortion). This way, the impacts of the fixed media were able to come through the texture. | ||

| − | ''Limiters'' -- There were several limiters added to the patch to avoid feedback and clipping issues. I placed these | + | ''Limiters'' -- There were several limiters added to the patch to avoid feedback and clipping issues. I placed these after the mic input, after each of the effects, and right before my main output. |

| Line 142: | Line 142: | ||

Creating the score for the piece was a result of the following iterations: | Creating the score for the piece was a result of the following iterations: | ||

| − | + | - Drafting a formal outline [https://stanford.box.com/s/1720gxmxcrw956q9eey3yspscb0uqqhl (see page 1 of this PDF)] | |

| − | + | - Composing the MIDI piano/violin sequence [https://stanford.box.com/s/1720gxmxcrw956q9eey3yspscb0uqqhl (page 2)] | |

| − | + | - Drafting a contour sketch of the violin line [https://stanford.box.com/s/1720gxmxcrw956q9eey3yspscb0uqqhl (pages 3-8)] | |

| − | + | - Engraving the contour sketch into Finale. Some specific pitches were known at this point. [https://stanford.box.com/s/rcjk0r7jfpcv3vijsg5mcykqi1cjhtem (PDF here)] | |

| − | + | - Filling in the pitches for these contours. This was done by mapping my MIDI keyboard to a Max patch which interpreted the MIDI data and fed it through a seive of scales and set classes. I then adjusted the pitches to my liking and made it more idiomatic for violin playing (e.g. the inclusion of open strings in fast passages, playable double-stops, etc.). This draft of the score can be seen [https://stanford.box.com/s/vvx9v3an7f04ly350uzkvviirb2eppie here]. | |

| − | + | - Finally, after the electronics were completed, the score was updated to include small adjustments as well as the addition of the choreography in the second half of the piece. | |

| − | + | - [https://stanford.box.com/s/97eqpat0811u8cauwiindw4t9ffg3fx5 The most recent (although by no means final) version of the score can be found here]. | |

| Line 163: | Line 163: | ||

- A more nuanced focus on live balance, including more sidechaining between different effects and the sound files. As part of this, I'd like to make the compression threshold, ratio, and noise floor amounts more accessible from the main GUI. This will help compensate for the variability of performance venues and equipment used (like microphones) so that the sidechain compression can remain consistent across performances. | - A more nuanced focus on live balance, including more sidechaining between different effects and the sound files. As part of this, I'd like to make the compression threshold, ratio, and noise floor amounts more accessible from the main GUI. This will help compensate for the variability of performance venues and equipment used (like microphones) so that the sidechain compression can remain consistent across performances. | ||

| − | - As mentioned before, I will want to use bow duration data instead of bow energy data in many places. The bow energy values were not scaled in a way that matched well with Mari's gestures, but instead gave a more-or-less logarithmic curve of energy; because of this, the energy data was useful in detecting the onset of fast tremolo notes, but not the steady increase and decrease in bowing speed that I was looking for | + | - As mentioned before, I will want to use bow duration data instead of bow energy data in many places. The bow energy values were not scaled in a way that matched well with Mari's gestures, but instead gave a more-or-less logarithmic curve of energy; because of this, the energy data was useful in detecting the onset of fast tremolo notes, but not the steady increase and decrease in bowing speed that I was looking for. |

- More nuance added to my Multitap-Exponential-Delay effect, including panning over time | - More nuance added to my Multitap-Exponential-Delay effect, including panning over time | ||

- More interesting and nuanced effects applied to the harmonizer sections | - More interesting and nuanced effects applied to the harmonizer sections | ||

Revision as of 17:26, 19 June 2017

[project page for 220C course with Chris Chafe, Spring 2017]

Overview

Title: Jouska

Composer: Chris Lortie

Duration: 9'

Instrumentation: Violin and live electronics (including µgic glove sensor)

Premiere Performance: Mari Kimura at the 2017 SPLICE Institute on Wednesday, June 14th at 7:30pm in the Dalton Center Recital Hall, Western Michigan University.

Setup: Violin >> DPA clip-on mic >> Max/MSP on a laptop >> stereo output. During the piece, both fixed sound files and live processing of the violin input occur. Data from the violinist's glove sensor alter these effects and occasionally advance cues in the Max/MSP patch. There is no foot pedal involved in the piece.

Score and Recording

[score and recording of the premiere will be uploaded and linked here when they are finalized]

Program Notes

The word Jouska comes from the Dictionary of Obscure Sorrows, a compendium of invented words written by John Koenig that try to “give a name to emotions we all might experience but don’t yet have a word for.” Koenig defines Jouska as “a hypothetical conversation that you compulsively play out in your head…which serves as a kind of psychological batting cage where you can connect more deeply with people than in the small ball of everyday life, which is a frustratingly cautious game of change-up pitches, sacrifice bunts, and intentional walks.”

Circumstance

Jouska was written for the violinist Mari Kimura as part of a commissioned collaboration for the 2017 SPLICE Institute at Western Michigan University. The criteria for this commission only dictated that the piece should be around 7-9 minutes in length. After being introduced to my collaborator, Mari, I asked about the potential to make use of her µgic sensor, a glove sensor prototype she developed with Liubo Borissov at IRCAM. She was more than happy to accommodate this request and sent instructions through email about the nature of the data the sensor would provide. In early May, I coded for her a "precomposition patch," which included most of the live processing used in the piece; these effects were split into 11 different "presets," each of which utilized her sensor data in a specific way. This patch later influenced how I coded the final performance patch, and allowed me to better understand the results of the data-audio interaction. Mari and I finally met in person on June 11th at the SPLICE Institute; here, we tweaked the patch with some final adjustments for the data streams and addressed some balance issues with the live processing.

µgic sensor

The Data

Mari's µgic sensor was utilized as a major component in the piece, primarily to act as a bridge between the performative gestures inherent to her playing and the resulting processing of her sound. In this way, the data from the sensor was used to drive different parameter values in the live processing. The µgic sensor is built to track the following performative motions using a 9-axis accelerometer:

- 1) bow stroke duration - tracks the length of a bow stroke in 10s of milliseconds

- 2) pizzicato - a sforzando pizzicato motion can be reasonably traced

- 3) bow energy - the aggregate energy value for the entire sensor (on a scale from 0. to 1.)

Pitch tracking and amplitude/note-onset tracking were available as parameters to work with, although they do not require the use of the sensor glove. For pitch tracking, I used the IRCAM external, yin~.

Here is a video of Mari explaining sensor interactions in her piece Eigenspace.

Sensor Brainstorming

Before beginning the piece, I thought about what might be the most potent way to utilize the sensor data as an organic element in the piece. I wanted to use the sensor as a way to make the live electronics more congruent with the performative action. Certain movements cannot easily be tracked with amplitude or spectral algorithms alone. Mari is well known in the electroacoustic community for avoiding the use of a foot pedal to trigger cues, particularly because a foot pedal 1) destroys the illusion of interactivity, snapping the frame of reference towards the pedal itself for a brief moment, and 2) does not hold an organic performative role that is embedded in how the performer plays their instrument. With the above arguments in mind, I brainstormed what sorts of interactive relations might fall under the following criteria:

1) events that would be difficult to align if they were structured as performer and fixed media playback (for example, a pizzicato motion could start or stop a sound file)

2) ways in which I could combine multiple elements together (such as pitch detection and bow duration) to create more interesting processing that does not become stale over time

3) evolutions of effects that would seem "inorganic" if they were triggered only with a foot pedal

--A list of brainstormed ideas for sensor-processing interaction can be found here. This document includes Mari's correspondence as well.

Final Results

All three of the available data streams from the sensor were ultimately used in the piece. These uses included:

- using bow energy to crossfade between distortion and octave doubling

- using bow energy to control the amplitude of the harmonizer

- using bow duration to pitchshift notes for their individual durations

- using pizzicato motion to advance cues

- using pizzicato motion to start or end sound files

- using pizzicato motion to "capture" a pizzicato note and send it through a different effect than everything else

In addition to the sensor data, I also made use of the following interactions:

- using pitch tracking to advance cues or change effect parameters

- using silence detection to advance cues

- using amplitude tracking to change the "color" of my live distortion waveshaping

A snapshot of the data router in my Max patch can be viewed here. This router was created simply using [gate] objects, each of which was turned on and off over the course of the piece by the [pattr] object. I originally used the [router] object in combination with [matrixctrl] to control these data, but ran into several issues that were embedded in the [router] object itself; namely, the object was sending out both a control message as well as the data itself. Parsing this control message out of the stream seemed to be fruitless, since it was not consistent over time.
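The gate-based routing described above can be sketched outside of Max roughly as follows. This is an illustrative Python sketch, not the patch itself: the class name `DataRouter`, the cue numbers, and the stream and parameter names are all hypothetical stand-ins for the [gate] objects and the settings recalled by [pattr].

```python
class DataRouter:
    """Toy model of per-cue gates between sensor streams and effect parameters."""

    def __init__(self, cue_routes):
        # cue_routes maps a cue number to the set of (source, destination)
        # pairs whose gates should be open during that cue (hypothetical data).
        self.cue_routes = cue_routes
        self.open_gates = set()

    def recall_cue(self, cue):
        # Analogous to recalling a stored preset: open only this cue's gates.
        self.open_gates = set(self.cue_routes.get(cue, ()))

    def route(self, source, value):
        # Pass the value to every destination whose gate is currently open.
        return {dest: value for (src, dest) in self.open_gates if src == source}


router = DataRouter({
    1: {("bow_energy", "crossfade")},
    2: {("pizzicato", "next_cue"), ("bow_energy", "harmonizer_amp")},
})
router.recall_cue(1)
print(router.route("bow_energy", 0.5))
```

Because each gate only passes data, no control message is mixed into the stream, which is the property that made the gates easier to work with than the [router] approach.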

In retrospect, I would have had more success in using the bow duration data instead of the bow energy data in many places. The bow energy values were not scaled in a way that matched well with Mari's gestures, but instead gave a more-or-less logarithmic curve of energy; because of this, the energy data was useful in detecting the onset of fast tremolo notes, but not the steady increase and decrease in bowing speed that I was looking for. In future iterations of the patch, I will recode the bow energy correlations to be bow duration correlations instead.

The Max Patch

Interface and Cue System

The Max/MSP patch for the piece was constructed as a 16-cue system that either automatically or intuitively advances over the duration of the piece. I used the [pattr] object to store all sensor data and audio routing settings, and the [qlist] object to control all ramps, effects parameters, and triggered sound files. I ran into issues when using these in combination due to some simple order-of-operations flaws in my logic. Additionally, [qlist] is not ideal as a means of triggering ramps and soundfiles, since skipping to cues in rehearsal will play all previous cues. I managed to compensate for these issues with a few simple workarounds -- in future iterations of the patch, I will need to structure this logic a bit differently so that the cue system still complements my workflow but doesn't introduce these issues.

A few screenshots of the patch can be viewed here:

- Main GUI: Features the current cue number, a means of skipping to a new cue number for rehearsal, the data coming from the sensor, a record button for recording audio during performances, a description of each cue, level adjustments for the main outs/mic/sound files that can be saved, and a large slider which helps to coordinate timings within the piece.

- Effects GUI: Provides an interface to set individual levels for each of the effects and save them. Two effects are not shown here because their levels need not be adjusted from the GUI (these being the crossfader and the sidechain compressor).

- Audio Router: I can route audio signals to and from whichever effects I like for a particular cue.

- Data Router: I can route sensor data to an effects parameter only when I need it.

Live Processing Effects

There are six different effects used in the piece to process the violin:

Multi-tap Exponential Delay -- This is an effect I created initially for use in my acousmatic compositions. The effect is essentially a multi-tap delay with the option to provide an exponential curve to the delay times. The delay time and amplitude of each tap can be configured by the user on the main GUI. In the piece, I used this effect exclusively to process pizzicato notes (and ONLY pizzicato notes, not what surrounds them). I built an algorithm that allowed the effect to compensate for the sensor's latency, so that I could capture the pizzicato notes by themselves and send them through the delay lines.
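To make the idea of exponentially curved tap times concrete, here is a minimal Python sketch. It is not the Max implementation: the function names, the `curve` parameter, and the particular normalized-exponential formula are assumptions chosen for illustration, and the latency compensation for the sensor is not modeled.

```python
import math


def tap_times(num_taps, total_ms, curve=0.0):
    """Delay time in ms for each tap, with the last tap landing at total_ms.

    curve = 0 gives evenly spaced taps; a positive curve places the early
    taps close together and spreads the later ones further apart.
    """
    times = []
    for i in range(1, num_taps + 1):
        p = i / num_taps  # linear position of this tap, from 1/N up to 1
        if abs(curve) < 1e-9:
            shaped = p
        else:
            shaped = math.expm1(curve * p) / math.expm1(curve)
        times.append(total_ms * shaped)
    return times


def multitap_delay(signal, sample_rate, times_ms, gains):
    """Sum one delayed, scaled copy of the signal per tap (dry signal excluded)."""
    delays = [int(round(t * sample_rate / 1000.0)) for t in times_ms]
    out = [0.0] * (len(signal) + max(delays))
    for delay, gain in zip(delays, gains):
        for n, x in enumerate(signal):
            out[delay + n] += gain * x
    return out


print(tap_times(4, 1000.0))        # evenly spaced taps
print(tap_times(4, 1000.0, 2.0))   # exponentially curved taps
```

Each tap has its own delay time and gain, mirroring the per-tap time and amplitude controls described above.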

Distortion -- The distortion effect used in the piece was drawn from the stkr waveshaping toolkit (shared publicly on the Cycling74 forums). This effect uses [gen~] in combination with [genexpr] to change the waveshape of the signal for each incoming sample. The amplitude of the violin input signal is also used to "color" this distortion in real-time so that the effect does not have a flat timbre.
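The general idea of amplitude-colored waveshaping can be sketched as below. This is not the stkr toolkit's code: the tanh transfer curve, the peak-follower envelope, and the parameter names (`min_drive`, `max_drive`, `smoothing`) are all assumptions made for illustration.

```python
import math


def colored_waveshaper(samples, min_drive=1.0, max_drive=20.0, smoothing=0.999):
    """Per-sample tanh waveshaper whose drive follows the input amplitude."""
    envelope = 0.0
    out = []
    for x in samples:
        # Simple peak follower: jump up immediately, decay slowly.
        envelope = max(abs(x), envelope * smoothing)
        # Louder playing pushes the drive (and so the timbre) toward max_drive.
        drive = min_drive + (max_drive - min_drive) * min(envelope, 1.0)
        # Normalize so that a full-scale input still peaks at +/-1.
        out.append(math.tanh(drive * x) / math.tanh(drive))
    return out


print(colored_waveshaper([0.0, 0.1, 0.5, -1.0]))
```

Tying the drive to the envelope means quiet passages are shaped gently and loud passages are shaped hard, so the distortion's timbre changes with the playing rather than staying flat.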

Convolution -- I used live convolution with a sound file of jingle bells (the instrument, not the Christmas song) to create a shimmering effect. For this, I used the external [multiconvolve~], developed by HISStools (http://www.thehiss.org/) as part of their Impulse Response Toolbox.

Harmonizer -- I used a publicly-available Max for Live patch by jonasobermueller called jo.FFTHarmonizer 1.0. This transposes the pitch of the incoming signal in the frequency domain through up to four voices. I also have control over the panning and amplitude of each of these voices.

Crossfader -- I created a crossfader subpatcher as a means to crossfade between two effects -- this proved to be a useful means of utilizing the sensor data and of creating a smooth fade from one cue's effects to the next.
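A crossfade between two effect outputs driven by a single 0-to-1 control value (such as the bow energy stream) might look like the sketch below. The equal-power curve is an assumption; the text above does not say which fade law the subpatcher uses, and the function name is hypothetical.

```python
import math


def equal_power_crossfade(a, b, position):
    """Mix samples a and b; position 0.0 gives only a, 1.0 gives only b."""
    position = min(max(position, 0.0), 1.0)  # clamp out-of-range control data
    gain_a = math.cos(position * math.pi / 2)
    gain_b = math.sin(position * math.pi / 2)
    return gain_a * a + gain_b * b


# Halfway through the fade, both inputs are attenuated by the same amount.
print(equal_power_crossfade(1.0, 1.0, 0.5))
```

An equal-power law keeps the combined energy roughly constant through the middle of the fade, whereas a plain linear fade tends to dip in perceived loudness at the midpoint.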

Sidechain Compressor -- I used a simple envelope follower created in [gen~] to create a sidechain compressor. Because it was coded in [gen~], the compressor was more efficient on the CPU, and was evaluated once every sample (48000 times per second), allowing for minimal latency. The compressor allowed me to fix balance issues between my violin processing and the fixed media files (which were especially problematic when dealing with live distortion). This way, the impacts of the fixed media were able to come through the texture.
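The per-sample logic of an envelope-follower sidechain compressor can be sketched in Python as follows. This is not the [gen~] code from the patch: the one-pole attack/release follower, the hard-knee gain computation, and every default value other than the 48000 Hz sample rate are assumptions for illustration.

```python
import math


def sidechain_compress(main, sidechain, sample_rate=48000,
                       threshold_db=-24.0, ratio=4.0,
                       attack_ms=5.0, release_ms=100.0):
    """Duck `main` (e.g. violin processing) when `sidechain` (e.g. fixed media) is loud."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    out = []
    for x, key in zip(main, sidechain):
        # Envelope follower on the sidechain, updated once per sample.
        level = abs(key)
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        # Gain reduction applies only to the part of the level above threshold.
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        over_db = max(level_db - threshold_db, 0.0)
        gain_db = -over_db * (1.0 - 1.0 / ratio)
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out


ducked = sidechain_compress([1.0] * 4800, [1.0] * 4800)
print(ducked[-1])  # the main signal is strongly attenuated once the envelope settles
```

Exposing `threshold_db` and `ratio` as arguments corresponds to the kind of controls one might want reachable from a main interface when venues and microphones vary.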

Limiters -- There were several limiters added to the patch to avoid feedback and clipping issues. I placed these after the mic input, after each of the effects, and right before my main output.

Fixed Media

The fixed media in the piece was primarily used to aid in the rise and fall of dynamics, particularly in conjunction with the live processing (which proves to be much more difficult to control). There is one section, repeated twice with developments, that acts as a unique counterpart to this, in which a sound file of MIDI violin and piano is played. In these sections, the performer is asked to "air-bow" to the sound file; this provides the Max/MSP patch with sensor data (specifically bow duration), which is in turn used to manipulate the tuning of the sound file in real-time.

There is an extended section of the piece in which the performer must "choreograph" their actions in alignment with a 2-minute sound file. Mari looks at the laptop screen to align these motions with impacts (she sees a slider going across the screen at the audio rate). This choreography is meant to look interactive as if the sound file were controlled by the sensor data; however, it is not interactive at all. This illusion must be maintained by the performer by executing these actions exactly in time with each impact OR slightly before (which suggests latency from the sensor).

The Score

Creating the score for the piece was a result of the following iterations:

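- Drafting a formal outline (see page 1 of this PDF: https://stanford.box.com/s/1720gxmxcrw956q9eey3yspscb0uqqhl)

- Composing the MIDI piano/violin sequence (page 2 of the same PDF)

- Drafting a contour sketch of the violin line (pages 3-8 of the same PDF)

- Engraving the contour sketch into Finale. Some specific pitches were known at this point. (PDF here: https://stanford.box.com/s/rcjk0r7jfpcv3vijsg5mcykqi1cjhtem)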

- Filling in the pitches for these contours. This was done by mapping my MIDI keyboard to a Max patch which interpreted the MIDI data and fed it through a sieve of scales and set classes. I then adjusted the pitches to my liking and made them more idiomatic for violin playing (e.g. the inclusion of open strings in fast passages, playable double-stops, etc.). This draft of the score can be seen here: https://stanford.box.com/s/vvx9v3an7f04ly350uzkvviirb2eppie

- Finally, after the electronics were completed, the score was updated to include small adjustments as well as the addition of the choreography in the second half of the piece.

- The most recent (although by no means final) version of the score can be found here: https://stanford.box.com/s/97eqpat0811u8cauwiindw4t9ffg3fx5
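The pitch-filling step in the list above passes MIDI notes through a sieve of scales and set classes. A minimal Python sketch of that kind of sieve is shown here; it is not the Max patch, and the function name, the nearest-pitch rule, and the downward tie-break are assumptions made for illustration.

```python
def sieve_pitch(midi_note, pitch_classes):
    """Return the nearest MIDI note whose pitch class is allowed (ties go down)."""
    allowed = set(pc % 12 for pc in pitch_classes)
    # Any non-empty pitch-class set has a member within 6 semitones.
    for offset in range(0, 7):
        for candidate in (midi_note - offset, midi_note + offset):
            if candidate % 12 in allowed:
                return candidate
    raise ValueError("pitch_classes must not be empty")


c_major = [0, 2, 4, 5, 7, 9, 11]
print([sieve_pitch(n, c_major) for n in [60, 61, 63, 66]])
```

A set class can be passed in the same way as a scale, since both reduce to a collection of pitch classes; the hand adjustments for idiomatic violin writing described above would then happen after this filtering.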

Looking Ahead

Some updates I want to make to the piece for future performances include:

- A more nuanced focus on live balance, including more sidechaining between different effects and the sound files. As part of this, I'd like to make the compression threshold, ratio, and noise floor amounts more accessible from the main GUI. This will help compensate for the variability of performance venues and equipment used (like microphones) so that the sidechain compression can remain consistent across performances.

- As mentioned before, I will want to use bow duration data instead of bow energy data in many places. The bow energy values were not scaled in a way that matched well with Mari's gestures, but instead gave a more-or-less logarithmic curve of energy; because of this, the energy data was useful in detecting the onset of fast tremolo notes, but not the steady increase and decrease in bowing speed that I was looking for.

- More nuance added to my Multitap-Exponential-Delay effect, including panning over time

- More interesting and nuanced effects applied to the harmonizer sections