Difference between revisions of "Q3osc: overview"


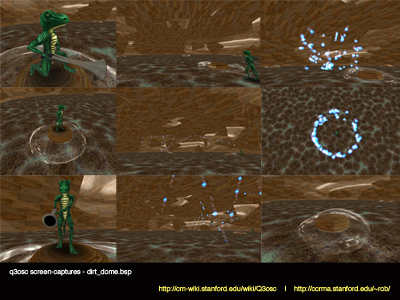


Revision as of 08:54, 30 May 2008

q3osc is a heavily modified version of the ioquake3 gaming engine featuring an integrated oscpack implementation of Open Sound Control (OSC) for bi-directional communication between a game server and a multi-channel ChucK audio server. By combining ioquake3’s robust physics engine and multiplayer network code with oscpack’s full-featured implementation of the OSC specification, q3osc repurposes game clients and previously unintelligent in-game weapon projectiles as independent, behavior-driven, OSC-emitting virtual sound sources, spatialized within a multi-channel audio environment for real-time networked performance.
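To make the idea of an OSC-emitting projectile concrete, the following is a minimal sketch of how a single bounce event could be packed into an OSC 1.0 message. It is not q3osc's code (q3osc uses oscpack from within the game engine); the address `/projectile/bounce` and the choice of three float arguments for an x, y, z position are assumptions made purely for illustration.

```python
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, zero-padded to a multiple of 4 bytes."""
    raw = s.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)  # e.g. ",fff" for three floats
    payload = b"".join(struct.pack(">f", v) for v in floats)
    return osc_string(address) + osc_string(type_tags) + payload


# Hypothetical address and coordinates, not taken from q3osc itself.
packet = osc_message("/projectile/bounce", 128.0, -64.0, 32.0)
```

A packet built this way could then be sent to an audio server over UDP, for example with `socket.sendto`, where a listening ChucK program would unpack the coordinates.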

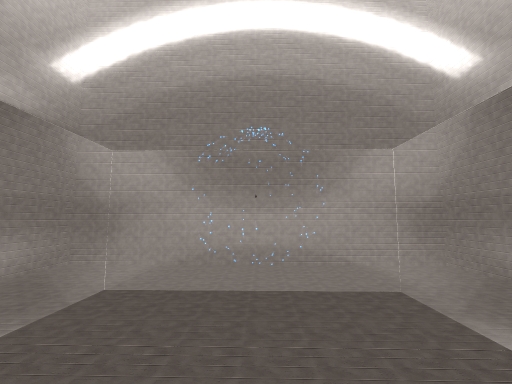

Within the virtual environment, performers can fire differently colored projectiles at any surface in the rendered 3D world to produce various musical sounds. As each projectile contacts the environment, its bounce location is used to spatialize sound across the multi-speaker sound field in the real-world listening environment. Currently in use with the Stanford Laptop Orchestra (SLOrk), q3osc is designed to scale to a distributed environment of multiple computers and multiple hemispherical speaker arrays.
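As a rough illustration of mapping a bounce location onto a speaker array, the sketch below computes one gain per speaker from the distance between the bounce point and each speaker, then normalizes the gains to constant total power. The distance-based weighting, the `rolloff` parameter, and the four-speaker layout are all assumptions chosen for this example; the source does not describe the spatialization method q3osc actually uses.

```python
import math


def speaker_gains(source, speakers, rolloff=1.0):
    """Return one gain per speaker, larger for speakers nearer the source.

    Gains are normalized so that their squares sum to 1 (constant power).
    """
    weights = []
    for spk in speakers:
        d = math.dist(source, spk)  # Euclidean distance (Python 3.8+)
        weights.append(1.0 / (1.0 + rolloff * d))
    norm = math.sqrt(sum(w * w for w in weights))
    return [w / norm for w in weights]


# Hypothetical square layout: front-left, front-right, rear-right, rear-left.
speakers = [(-1.0, 1.0), (1.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]

# A bounce near the front-right corner is loudest in the front-right speaker.
gains = speaker_gains((0.8, 0.9), speakers)
```

A bounce at the center of this layout is equidistant from all four speakers, so each receives the same gain.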

q3osc allows composers to program interactive performance environments using a combination of traditional game level-development tools and interactive audio processing software such as ChucK, SuperCollider, Pure Data, or Max/MSP.